AI Trust Gap

A survey exploring the American public's perceptions of and trust in artificial intelligence.

AI may be transformative, but it has the potential for misuse. We’ve seen bias. Deepfakes. And attacks on facial recognition systems.

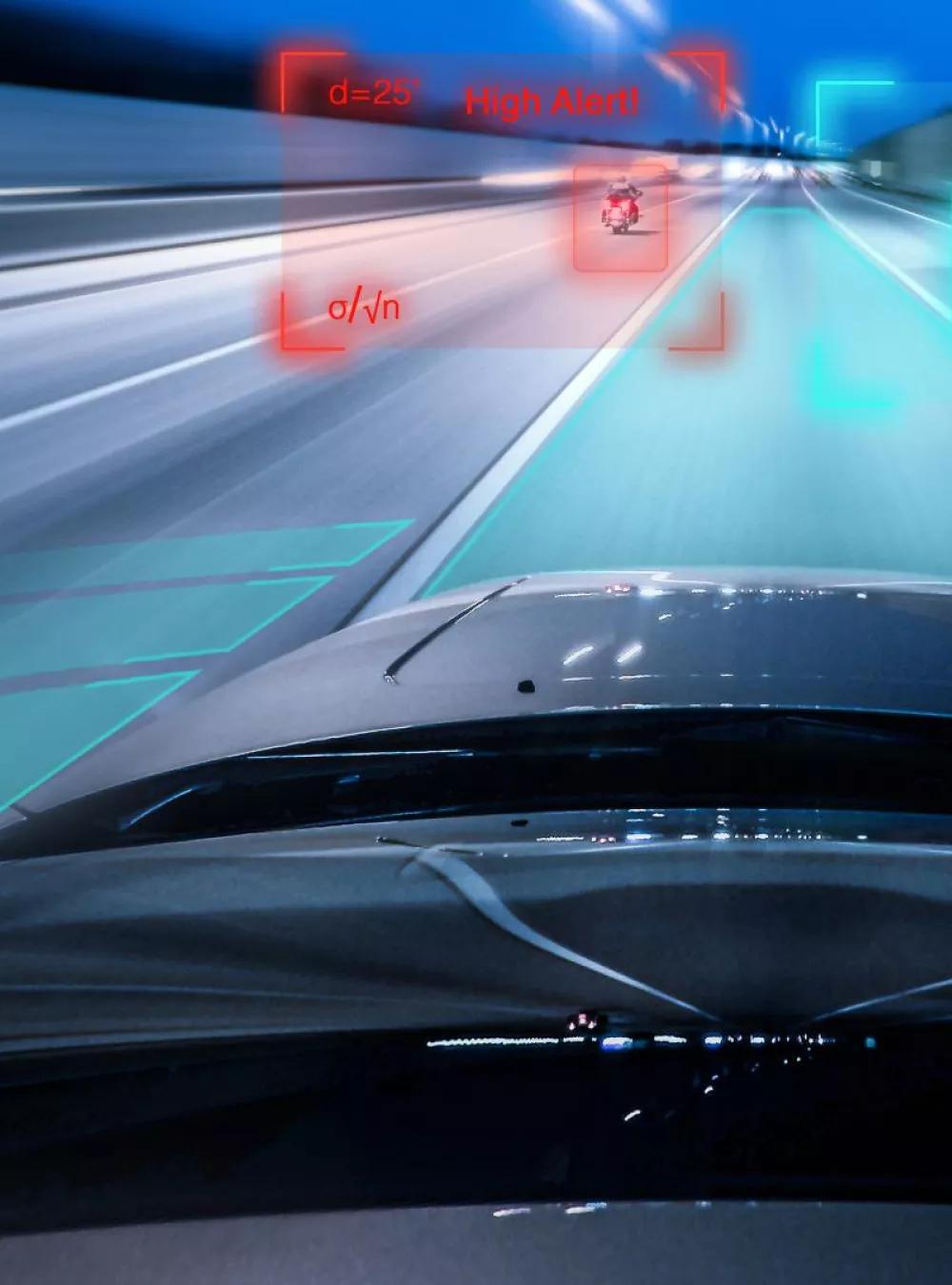

Artificial intelligence (AI) technologies and frameworks could radically boost efficiency and productivity in nearly every field. They can enable better, faster analysis of imagery in fields ranging from medicine to national security. But if consumers don’t trust AI, adoption may be limited to less consequential tasks, such as recommendations on streaming services or navigating a call center in search of a human.

We collaborated with The Harris Poll to better understand Americans’ perceptions of AI and inform our work. MITRE is convening government, industry, and academia to develop frameworks and technologies that can make AI solutions safer and more effective.

Our survey findings can inform decisions about the responsible development and deployment of AI systems, including:

- Public concerns to address

- Areas for increased investment

- Potential for collaboration across industry, government, and academia

- Opportunities to increase trust through education

Understanding Americans' perceptions of AI can help inform government and industry R&D priorities.

Key Findings

- 82% believe AI should be regulated

- 78% are very or somewhat concerned about AI being used for malicious intent

- 37% are comfortable with government agencies using AI to make decisions that directly affect them

- 35% are comfortable using AI for autonomous rideshares

Only 48% of Americans believe AI is safe and secure.

Malicious intent

Most Americans worry about the safety and fairness of AI systems. These worries grow when they consider AI’s role in important functions like self-driving cars, government benefits processing, or online health chatbots. Their concerns are understandable. We’ve seen news reports about AI systems that can be tricked or corrupted. Others are biased or simply ineffective. We learned with software and cybersecurity that you must address concerns from the beginning, not as an afterthought.

We need to address these issues with AI now. MITRE is developing advanced AI solutions, along with new assurance approaches and tools.

We are convening government, academia, and industry to address these implications and ensure the responsible use of AI for public good.

We need to address AI security issues now.

Building trust

The technology for autonomous vehicles is here, but few Americans are ready to travel in them. The survey findings show that Americans want industry to do more to address the use of AI for things like deepfakes and sophisticated cyber attacks.

The cybersecurity field offers examples, including information-sharing organizations and vulnerability disclosures, where industry collaborates, rather than competes, on security. AI assurance calls for collaboration as well. Better AI assurance will make it more likely that consumers become comfortable adopting the new solutions industry develops.

Collaboration

The survey data points to the need for whole-of-nation collaboration on research and development around AI technology challenges.

The data also illustrates that people who are more familiar with AI, like tech experts, are more likely to trust it for consequential tasks. This presents an opportunity for government and industry to work together and increase AI education efforts to earn the general public’s trust.

Missed Opportunities

We’ll just scratch the surface of AI’s potential if people rely on it only for purposes like entertainment recommendations. In fact, AI may be one of our most important tools to tackle the hardest problems facing us, from climate change to healthcare to national defense. Distrust of these technologies will, at a minimum, delay and possibly prevent us from realizing transformational benefits like dramatically improved patient outcomes and far safer roads and skies.

Trust drops as AI applications become more consequential.

Regulation

The survey showed Americans embrace both rights and regulations for AI. As the U.S. has developed technology that has transformed sectors like healthcare and transportation, government and industry have worked together on consumer protections. AI is no different. The proposed AI Bill of Rights and the recently published NIST AI Risk Management Framework are useful starting points. The NIST framework was developed in collaboration with the private and public sectors; it targets better management of risks to individuals, organizations, and society.

If users believe that AI solutions are not only effective but feature similar protections, they’re more likely to be comfortable using these solutions themselves and seeing them employed by others.

Americans believe AI can assist, enhance, and empower, but they don’t yet trust it. Tech experts also express concern, and both groups support government regulation.

8-in-10 Americans believe AI technologies should be regulated.

Where MITRE Comes In

MITRE is developing advanced AI solutions in areas ranging from combating tax fraud to improving air traffic management. We’re also developing new AI testing tools to help our federal government sponsors understand whether new offerings deliver on their promise. And we’re leveraging MITRE’s cybersecurity expertise to develop tools to address the security implications of this technology.

We’re co-leading the Coalition for Health AI (CHAI) to develop open, transparent, and bias-free tools to set standards in collaboration with the Mayo Clinic, Duke University, industry, and federal government observers.

By working together, we can enable responsible pioneering in AI to better impact society.

Generational Differences

Levels of concern about, and comfort with, the use of AI differ markedly across generations.

Gen Z is 15%–20% less concerned than Boomers about uses of AI in, for example, autonomous vehicles and core infrastructure. Similarly, Gen Z is 20%–30% more comfortable than Boomers with the use of AI in applications such as government benefits processing and chatbots for telehealth.

Men, Democrats, younger generations, and Black/Hispanic Americans are more comfortable than their counterparts with the use of AI for federal government benefits processing, online doctor bots, and driverless rideshare vehicles.

All demographic groups express concerns about AI. Women, older generations, higher-income Americans, and White Americans express more concern about AI than their counterparts. Gen Z is less concerned than other generations, but more than half of Gen Z respondents still express concerns about AI.

Younger generations are less concerned about, and more comfortable with, using AI.

This survey was conducted by The Harris Poll on behalf of MITRE via the Harris On Demand omnibus product.

- Sample size: n=2,050

- Qualification Criteria: U.S. residents, adults ages 18+

- Mode: Online survey

- Weighting: Data weighted to ensure results are projectable to U.S. adults ages 18+

- Field Dates: November 3-7, 2022

- In tables and charts: Percentages may not add up to 100% due to weighting, computer rounding, and/or the acceptance of multiple responses.
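The note on weighting and rounding can be illustrated with a small sketch. The responses and weights below are hypothetical, not survey data, and the function name `weighted_percentages` is invented for illustration. The source does not describe the actual weighting procedure, so this only shows the general idea: each respondent counts in proportion to a weight, and rounding each share to a whole number can leave a total slightly off 100%.

```python
from typing import Dict, List, Tuple


def weighted_percentages(responses: List[Tuple[str, float]]) -> Dict[str, float]:
    """Return each answer's share of the total weight, as a percentage."""
    totals: Dict[str, float] = {}
    for answer, weight in responses:
        # Each respondent contributes their weight, not a flat count of 1.
        totals[answer] = totals.get(answer, 0.0) + weight
    grand_total = sum(totals.values())
    return {answer: 100.0 * w / grand_total for answer, w in totals.items()}


# Hypothetical (answer, weight) pairs; NOT taken from the survey.
sample = [
    ("agree", 0.5),
    ("agree", 0.5),
    ("disagree", 1.0),
    ("not sure", 1.0),
]

shares = weighted_percentages(sample)
# Round each share to a whole percent, as a chart or table typically would.
rounded = {answer: round(pct) for answer, pct in shares.items()}

print(rounded)                # {'agree': 33, 'disagree': 33, 'not sure': 33}
print(sum(rounded.values()))  # 99
```

Here the unrounded shares sum to 100%, but the displayed whole-number figures sum to 99%. Questions that accept multiple responses can push totals in the other direction, above 100%.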

Please contact media@mitre.org for questions or attribution.